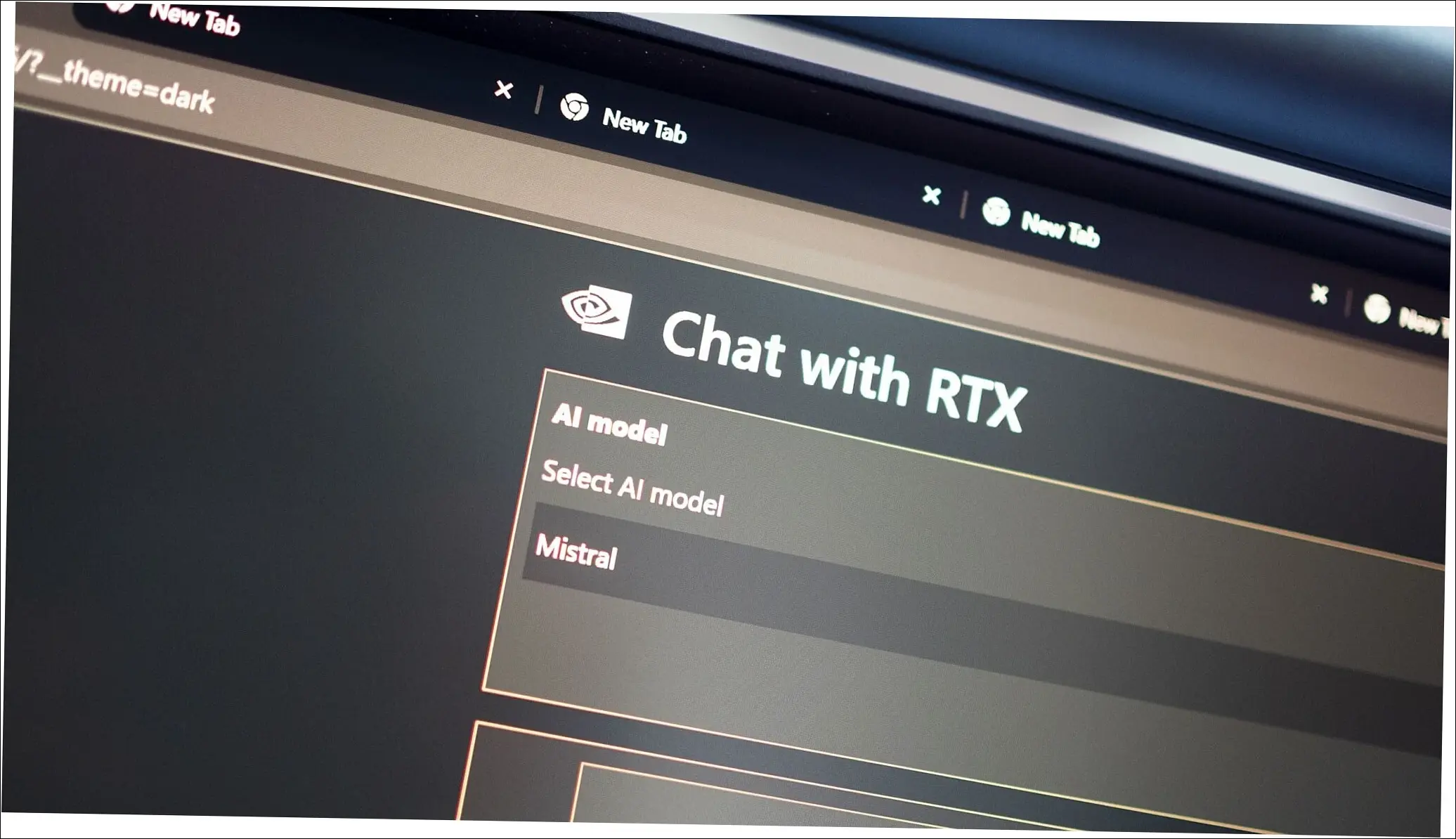

Artificial intelligence (AI) has been making waves in the tech world, especially since the launch of ChatGPT, followed by Google Gemini and Microsoft Copilot. One company at the forefront of this revolution is chipmaker Nvidia, which is now a new entrant in the chatbot space itself. It recently unveiled “Chat with RTX”, an offline, GPT-like tool that lets users create personalized AI chatbots that run directly on their personal computers without connecting to the internet.

In this article, we explain what Nvidia’s Chat with RTX is, how it works, what its key features are, and what benefits it offers users.

Nvidia’s Chat with RTX is an AI-powered feature that allows users to create their own personal AI assistant. Unlike traditional AI chatbots, which are hosted in the cloud, Nvidia’s Chat with RTX works offline on users’ computers.

This approach has been touted as revolutionary. Until now, generative AI chatbots such as ChatGPT, Gemini, and Copilot have been primarily cloud-based, running on centralized servers powered by Nvidia’s graphics processing units (GPUs). With Chat with RTX, the AI runs directly on the user’s own computer. Keeping data on the device improves privacy, and avoiding network round trips can reduce latency and speed up responses.

Nvidia’s Chat With RTX: The Tech Behind It

Chat with RTX combines two technologies. The first is retrieval-augmented generation (RAG), a technique that searches a collection of documents for passages relevant to the user’s question and adds them to the large language model’s prompt, so that the answer is grounded in that material. The second is Nvidia’s TensorRT-LLM, an open-source library that optimizes and accelerates large language model inference on Nvidia GPUs. Together, they let Chat with RTX draw on sources of information such as files stored locally on the user’s PC and respond quickly on local hardware.
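To make the retrieval step more concrete, here is a minimal, self-contained sketch of the RAG idea in Python. It is not Nvidia’s implementation: the word-overlap scoring, the hypothetical `retrieve` and `build_prompt` helpers, and the sample documents are illustrative assumptions. Production RAG systems typically rank passages with vector embeddings rather than shared words, and then send the assembled prompt to the language model.

```python
import re
from collections import Counter

# Hypothetical local "documents"; in a real setup these would be read from files on the PC.
DOCUMENTS = {
    "trip.txt": "Our team offsite is in Lisbon from 12 to 14 June.",
    "budget.txt": "The hardware budget for this quarter covers two new GPUs.",
    "notes.txt": "Remember to renew the software licenses before June.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, passage: str) -> int:
    """Toy relevance score: number of query words that also appear in the passage."""
    overlap = Counter(tokenize(query)) & Counter(tokenize(passage))
    return sum(overlap.values())


def retrieve(query: str, docs: dict[str, str], k: int = 1) -> list[str]:
    """Return the names of the k passages with the highest overlap score."""
    ranked = sorted(docs, key=lambda name: score(query, docs[name]), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 1) -> str:
    """Augment the user's question with the retrieved passages as context."""
    context = "\n".join(f"[{name}] {docs[name]}" for name in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("Where is the team offsite?", DOCUMENTS))
```

In a full pipeline, the returned prompt would then be passed to the locally running language model; that generation step is omitted here.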

Because the model runs locally on the GPU, performance depends on the user’s own hardware. To use Chat with RTX, a system needs a GeForce RTX 30 or 40 Series GPU, or an NVIDIA RTX Ampere or Ada Generation workstation GPU such as the newly launched RTX 2000 Ada Generation, with at least 8GB of video random access memory (VRAM). The PC should also have at least 16GB of system RAM.
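To see why VRAM is the limiting factor, a common rule of thumb is that a model’s weights alone need roughly (number of parameters × bits per weight ÷ 8) bytes of GPU memory. The sketch below applies that formula. The 7-billion-parameter model size is an illustrative assumption, not a documented Chat with RTX configuration, and real memory use is higher because of activations, the KV cache, and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory in GB (1 GB = 1e9 bytes) needed just to hold the model weights.

    Ignores activations, the KV cache and runtime overhead, so actual usage is higher.
    """
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


# Illustrative assumption: a 7-billion-parameter model at different precisions.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

At 16-bit precision the weights alone (about 14 GB) would not fit on an 8GB card, while 4-bit quantization (about 3.5 GB) leaves headroom, which is one reason quantized models are popular for local inference.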

Key Features of Nvidia’s Chat with RTX

Customization

One of the main advantages of Chat with RTX is how much users can customize their chatbot. They decide which content on their device, such as a folder of documents, the chatbot can access when generating responses.

Privacy

Chat with RTX also protects user privacy by keeping conversations on the device itself. Because prompts and documents are not sent to a cloud service, no outside company or server receives the conversation between the user and the AI chatbot.

Performance

Because it runs locally, Chat with RTX avoids the network delays inherent in cloud-based systems, which can make responses faster. It also uses RAG to supplement the model’s built-in knowledge with data from the user’s own sources, giving it a broader pool of information on which to base its responses.

How to Use Chat With RTX

Chat with RTX is user-friendly. Once it is downloaded and installed, it works much like online chatbots such as ChatGPT and Gemini. It supports several file formats, including PDF, Word documents, plain text, and XML. Users can also provide the URL of a YouTube video or playlist, and the chatbot will load the videos’ transcripts.
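As a rough illustration of the file formats listed above, the sketch below walks a folder and picks out files whose extensions match the document types mentioned (PDF, Word, text, and XML). The extension set and the hypothetical `find_supported_files` helper are assumptions made for illustration; this is not how Chat with RTX itself scans folders, and the exact extensions it accepts may differ between versions.

```python
from pathlib import Path

# Assumed extensions for the formats named in the article (PDF, Word, text, XML).
SUPPORTED_EXTENSIONS = {".pdf", ".doc", ".docx", ".txt", ".xml"}


def find_supported_files(folder: str) -> list[Path]:
    """Recursively list files under `folder` whose extension is supported (case-insensitive)."""
    return sorted(
        path
        for path in Path(folder).rglob("*")
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

For example, `find_supported_files("C:/Users/me/Documents")` (a hypothetical path) would return the PDFs, Word files, text files, and XML files in that folder and its subfolders, ignoring images and other file types.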

Your PC must be running Windows 11 and have up-to-date NVIDIA GPU drivers installed. The full system requirements are listed below.

System Requirements and Compatibility

| Requirement | Details |
| --- | --- |
| Platform | Windows (not currently available on macOS) |
| GPU | NVIDIA GeForce™ RTX 30 or 40 Series GPU, or NVIDIA RTX™ Ampere or Ada Generation GPU, with at least 8GB of VRAM |
| RAM | 16GB or more |
| OS | Windows 11 |
| Driver | 535.11 or later |
| Download size | 35 GB |
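If you want a quick way to compare your own GPU against the table, one option is to query it with `nvidia-smi`, which ships with the NVIDIA driver, and check the result in a short script. The sketch below assumes the output of `nvidia-smi --query-gpu=name,memory.total,driver_version --format=csv,noheader,nounits` (one line per GPU, memory in MiB). The name-matching regex and the hypothetical `check_gpu` helper are simplifying assumptions rather than an official compatibility check, and the script does not test the RAM, OS, or disk-space rows.

```python
import re
import subprocess

MIN_VRAM_GB = 8        # From the table: at least 8GB of VRAM
MIN_DRIVER = (535, 11)  # From the table: driver 535.11 or later

# Simplified heuristic for "GeForce RTX 30/40 Series or RTX Ampere/Ada Generation".
SUPPORTED_NAME = re.compile(
    r"GeForce RTX (30|40)\d{2}|RTX A\d{4}|RTX \d{4} Ada", re.IGNORECASE
)


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a driver version such as '551.23' into (551, 23) for comparison."""
    return tuple(int(part) for part in text.strip().split("."))


def check_gpu(csv_line: str) -> dict[str, bool]:
    """Check one 'name, memory_mib, driver' line from nvidia-smi against the table."""
    name, memory_mib, driver = (field.strip() for field in csv_line.split(","))
    return {
        "gpu_series": bool(SUPPORTED_NAME.search(name)),
        # Round MiB to whole GiB so cards reporting e.g. 8188 MiB still count as 8GB.
        "vram": round(int(memory_mib) / 1024) >= MIN_VRAM_GB,
        "driver": parse_version(driver) >= MIN_DRIVER,
    }


def query_gpus() -> list[str]:
    """Run nvidia-smi (requires an NVIDIA driver) and return one CSV line per GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total,driver_version",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return [line for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    try:
        for line in query_gpus():
            print(line, check_gpu(line))
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvidia-smi is not available; is an NVIDIA driver installed?")
```

Parsing is kept separate from the `nvidia-smi` call so that `check_gpu` can be tried on sample lines even on a machine without an NVIDIA GPU.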

Where To Download and Install?

Developer Opportunities with Nvidia’s Chat With RTX

Developers interested in building on Chat with RTX can explore the TensorRT-LLM RAG developer reference project on GitHub, on which the app is built. Nvidia is also hosting a Generative AI on Nvidia RTX developer contest, in which developers around the world can compete for prizes including a GeForce RTX 4090 GPU and an invitation to the 2024 Nvidia GTC conference.

The Impact of Nvidia’s Chat With RTX on the AI Landscape

Chat with RTX marks a shift in Nvidia’s role in the AI landscape. Beyond supplying the GPUs that power AI in the cloud and in data centers, the company is positioning RTX PCs as a platform for running generative AI applications locally, with benefits in privacy, flexibility, and performance.

Developer adoption is therefore critical to this strategy. The more developers build on and test the platform, the better its chances of competing with cloud services such as ChatGPT and Google’s Gemini.

Final Words

Nvidia’s Chat with RTX is an exciting AI development. It brings the power of AI directly to the user’s device, offering a high degree of customization, privacy, and performance.

Although the technology is still in its infancy, it has enormous potential, and it should keep improving as more developers build on it.

Chat with RTX is a technology that developers and tech enthusiasts following AI trends should not ignore.

Additional Information

Although the technology behind Chat with RTX is complex, using it does not have to be. With a friendly interface and easy-to-follow instructions, you can enjoy the benefits of a personal AI chatbot on your own device. So why wait? Try Nvidia’s Chat with RTX and get a taste of tomorrow’s technology today.

For more reads, visit The North Ink (https://thenorthink.com).